Header images from: Evangelion: 3.0 + 1.0 Thrice Upon a Time (left), The Tale of the Princess Kaguya (center) and The Wind Rises (right).

Camera Feature Recognition

What is the aim?

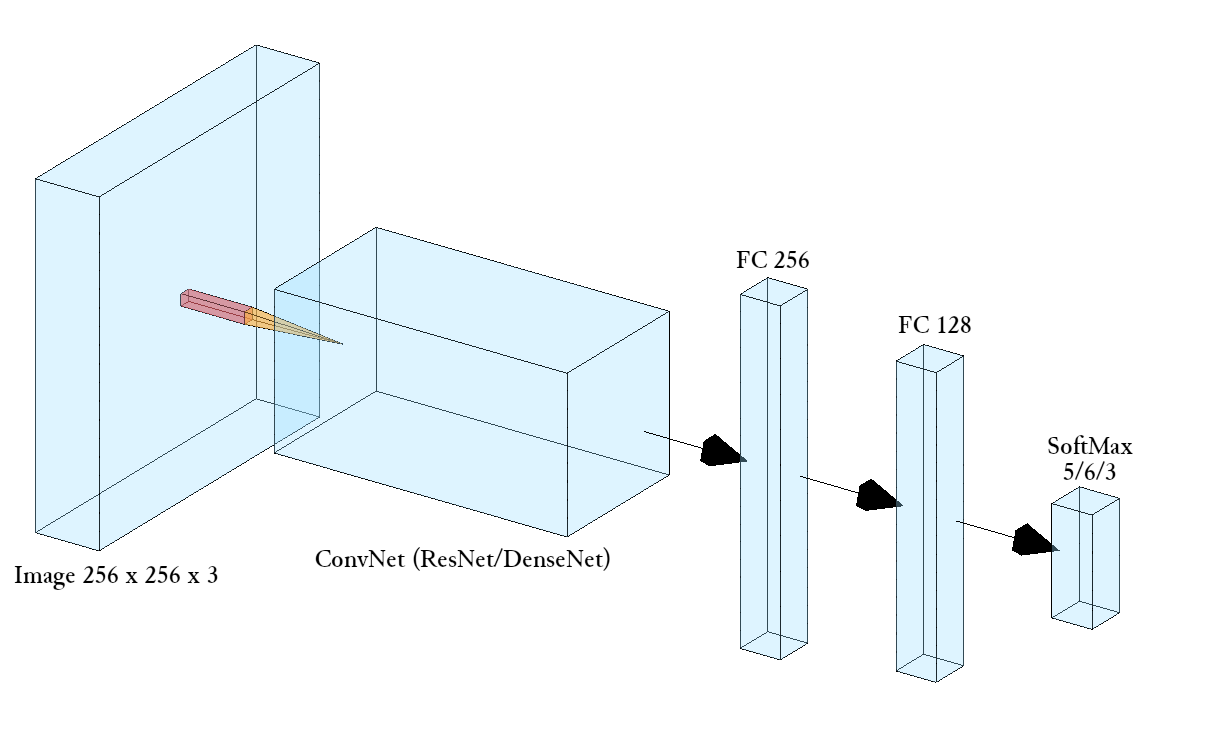

In this work we propose a new dataset of frames from popular Japanese animated films and use it to fine-tune pre-trained Convolutional Neural Networks for the automatic classification of camera features. The resulting models can support automated movie annotation for a wide range of applications, such as stylistic analysis, video recommendation, and studies in media psychology.

Dataset

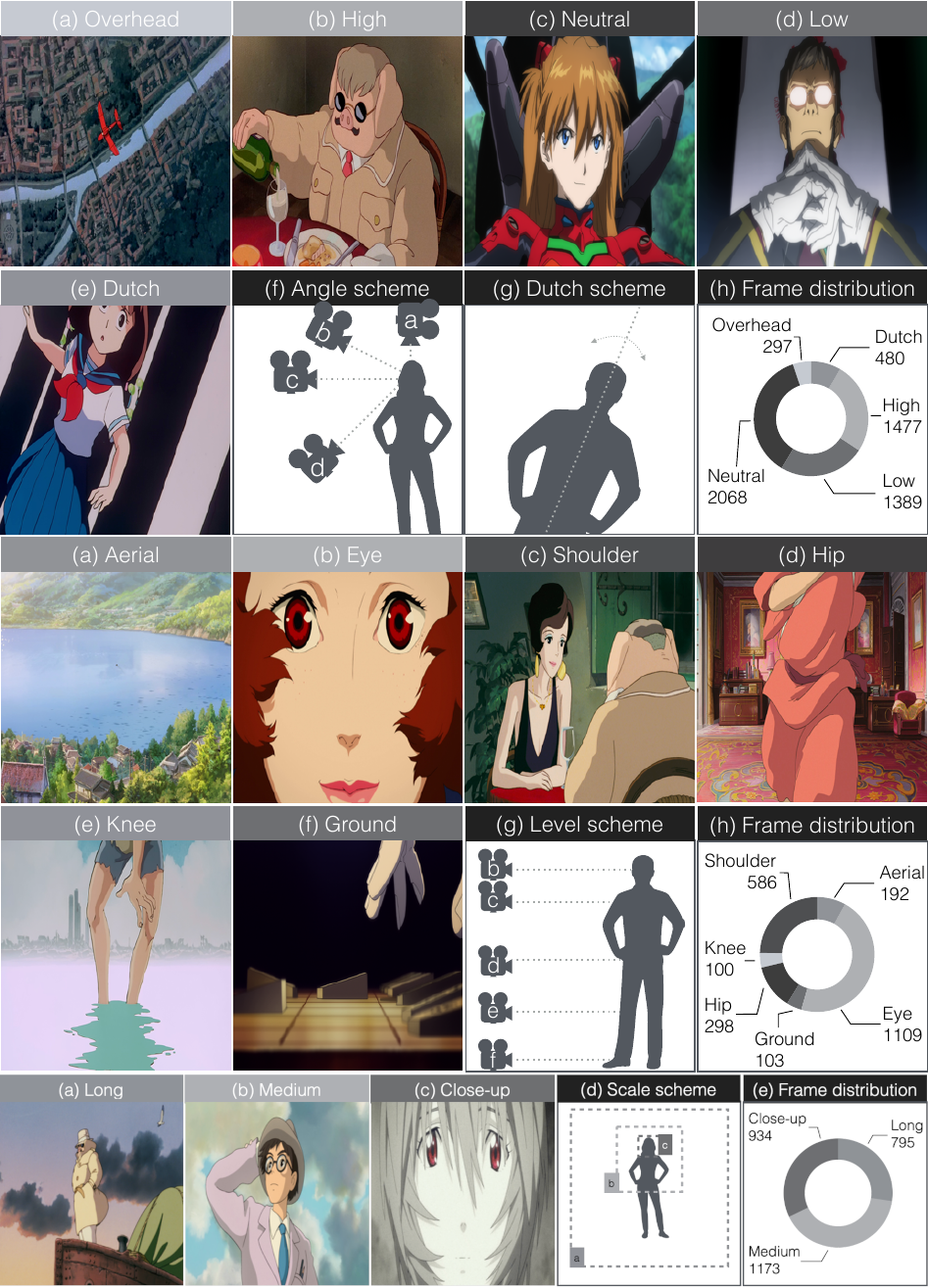

We collect a large dataset of more than 17,000 shot frames from animated films released between 1982 and 2021 and directed by some of the most important directors of Japanese animation, such as Hayao Miyazaki, Hideaki Anno and Mamoru Oshii. Each frame is manually annotated with the corresponding class for camera angle (Overhead, High, Neutral, Low and Dutch), camera level (Aerial, Eye, Shoulder, Hip, Knee and Ground), and shot scale (Long, Medium and Close-up). Annotations are provided by two independent coders, while a third person checks their coding and decides in cases of disagreement.
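The class taxonomy and the two-coder protocol described above can be summarized in a few lines. This is a hypothetical sketch: the names `CAMERA_FEATURES`, `final_label` and the `referee` callback are illustrative and not part of the released dataset, and the function models only the adjudication of disagreements, not the third person's general review of the coding.

```python
from typing import Callable

# Class labels per camera feature, as listed in the dataset description.
CAMERA_FEATURES = {
    "angle": ("Overhead", "High", "Neutral", "Low", "Dutch"),
    "level": ("Aerial", "Eye", "Shoulder", "Hip", "Knee", "Ground"),
    "scale": ("Long", "Medium", "Close-up"),
}


def final_label(feature: str, coder_a: str, coder_b: str,
                referee: Callable[[str, str], str]) -> str:
    """Combine two independent annotations into the final label.

    If the two coders agree, their label is kept; otherwise the third
    person (modelled here by the hypothetical `referee` callback) decides.
    """
    classes = CAMERA_FEATURES[feature]
    for label in (coder_a, coder_b):
        if label not in classes:
            raise ValueError(f"{label!r} is not a {feature} class")
    if coder_a == coder_b:
        return coder_a
    decision = referee(coder_a, coder_b)
    if decision not in classes:
        raise ValueError(f"referee chose invalid {feature} class {decision!r}")
    return decision
```

For example, `final_label("angle", "High", "Low", referee=lambda a, b: "Neutral")` returns the referee's choice, while two matching annotations are returned unchanged.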

Below is the list of movies used as sources for the annotated frames, split into two tables. Twelve movies are used for training, except for Shot Scale, for which only three of them are used because the number of frames they provide was considered sufficient for the task; the other three movies are used for testing.

Movies Datasets

Training set

| Director | Movie title | Year | Duration (minutes) | Annotated frames: Camera Angle | Annotated frames: Camera Level | Annotated frames: Shot Scale |
|---|---|---|---|---|---|---|
| Hideaki Anno | Evangelion: 1.11 You Are (Not) Alone | 2007 | 98 | 563 | 193 | - |
| Hideaki Anno | Evangelion: 2.22 You Can (Not) Advance | 2009 | 108 | 601 | 255 | - |
| Hideaki Anno | Evangelion: 3.333 You Can (Not) Redo | 2012 | 96 | 460 | 219 | 1181 |
| Mamoru Oshii | Urusei Yatsura 2: Beautiful Dreamer | 1984 | 101 | 439 | 159 | - |
| Mamoru Oshii | Ghost in the Shell | 1995 | 83 | 346 | 202 | 620 |
| Hayao Miyazaki | Porco Rosso | 1992 | 102 | 387 | 226 | 1133 |
| Hayao Miyazaki | Spirited Away | 2001 | 125 | 357 | 227 | - |
| Hayao Miyazaki | Howl's Moving Castle | 2004 | 119 | 865 | 255 | - |
| Isao Takahata | The Tale of the Princess Kaguya | 2013 | 137 | 224 | 119 | - |
| Hiroyuki Imaishi | Promare | 2019 | 111 | 487 | 169 | - |
| Makoto Shinkai | Your Name. | 2016 | 112 | 430 | 219 | - |
| Satoshi Kon | Paprika | 2006 | 90 | 335 | 135 | - |

Test set

| Director | Movie title | Year | Duration (minutes) | Annotated frames: Camera Angle | Annotated frames: Camera Level | Annotated frames: Shot Scale |
|---|---|---|---|---|---|---|
| Hideaki Anno | Evangelion: 3.0+1.01 Thrice Upon A Time | 2021 | 155 | 1474 | 644 | 1289 |
| Hayao Miyazaki | The Wind Rises | 2013 | 126 | 981 | 385 | 839 |
| Tomoharu Katsumata | Arcadia of My Youth | 1982 | 130 | 493 | 353 | 546 |

| Camera feature | Training frames | Testing frames |
|---|---|---|
| Camera Angle | 5494 | 2948 |
| Camera Level | 2388 | 1382 |
| Shot Scale | 2934 | 2674 |

Below is the folder tree of the datasets, which is identical for the training and test sets. Each frame file ({$code_name_movie}_{$num_frame}.png) is a 256 x 256 pixel PNG image.

Tree structure Datasets

- train/test
  - angle
    - dutch
      - dutch_frame_01
      - dutch_frame_02
      - ...
    - high
      - high_frame_01
      - ...
    - low
    - neutral
    - overhead
  - level
    - aerial
    - eye
    - ground
    - hip
    - knee
    - shoulder
  - scale
    - CS
    - LS
    - MS
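Because each camera feature is a folder and each class a subfolder, the labels can be read directly from the paths, and the movie code can be recovered from the file name. The following is a minimal standard-library sketch under that assumption; the function names are hypothetical, and the movie codes used in any example are illustrative since the actual codes are not listed here.

```python
from __future__ import annotations

from collections import Counter
from pathlib import Path


def parse_frame_name(path: Path) -> tuple[str, int]:
    """Split '{code_name_movie}_{num_frame}.png' into (movie code, frame number).

    Splitting on the last underscore keeps any underscores inside the movie code.
    """
    movie, num = path.stem.rsplit("_", 1)
    return movie, int(num)


def index_task(split_root: Path, task: str) -> list[dict]:
    """Collect every PNG under <split_root>/<task>/<class>/ with its label and movie."""
    records = []
    task_dir = Path(split_root) / task
    for class_dir in sorted(p for p in task_dir.iterdir() if p.is_dir()):
        for png in sorted(class_dir.glob("*.png")):
            movie, frame = parse_frame_name(png)
            records.append(
                {"path": png, "label": class_dir.name, "movie": movie, "frame": frame}
            )
    return records


def class_counts(records: list[dict]) -> Counter:
    """Number of frames per class, e.g. to inspect class imbalance."""
    return Counter(r["label"] for r in records)
```

For example, `index_task(Path("train"), "angle")` lists the camera angle training frames. When working with Keras, `tf.keras.utils.image_dataset_from_directory` can likewise infer labels from the class subfolders of one task folder.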

Get the data

Please read the Research Use Agreement provided below.

Dataset Research Use Agreement

Premise: this project involves a set of activities aimed at AI-driven interpretation of cinematic data. The research activities are conducted by the Department of Information Engineering (DII) of the University of Brescia, Brescia, Italy (UniBS). The dataset is a collection of images and related data and metadata that is made accessible for research use only, starting from this website and after acceptance of the following terms of use. By registering for downloads, you agree to the following:

1. Permission is granted to view and use the Dataset without charge for research purposes only. Its sale is prohibited. Any non-academic research use needs to be evaluated case by case by the DII. If you intend to use this Dataset for any non-academic research use, you need to communicate it, describing the intended use, and receive approval from the DII.
2. In agreement with the mission of UniBS to promote the publication of scientific knowledge as open data, any computational model or algorithm that has used the Dataset and is publicly referenced (e.g. in a publication) should preferably be shared, including the code and model weights, and will in any case give appropriate credit by correctly citing the AniFeature project scientific papers, but not in any way that suggests that UniBS endorses you or your use.
3. Other than the rights granted herein, UniBS retains all rights, title, and interest in the Dataset.
4. You may make a verbatim copy of the "AniFeature Dataset" for uses as permitted in this Research Use Agreement. If another user within your organization wishes to use the Dataset, they must comply with all the terms of this Research Use Agreement.
5. YOU MAY NOT DISTRIBUTE, PUBLISH, OR REPRODUCE A COPY of any portion or all of the Dataset to others without specific prior written permission from the DII.
6. You must not modify, reverse engineer, decompile, or create derivative works from the Dataset. You must not remove or alter any copyright or other proprietary notices in the Dataset.
7. THE DATASET IS PROVIDED «AS IS», AND UNIBS AND ELTE DO NOT MAKE ANY WARRANTY, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE, NOR DO THEY ASSUME ANY LIABILITY OR RESPONSIBILITY FOR THE USE OF THIS DATASET.
8. Any violation of this Research Use Agreement or other impermissible use shall be grounds for immediate termination of use of this Dataset. In the event that UniBS determines that the recipient has violated this Research Use Agreement or that other impermissible use has been made, UniBS may direct that the undersigned data recipient immediately return all copies of the Dataset and retain no copies thereof, even if you did not cause the violation or impermissible use.
9. You agree to indemnify and hold UniBS harmless from any claims, losses or damages, including legal fees, arising out of or resulting from your use of the Dataset or your violation or role in violation of these Terms. You agree to fully cooperate in UniBS's defense against any such claims.

Data Augmentation

The initially extracted dataset is somewhat imbalanced, because some classes are rarely used in films: Overhead and Dutch for camera angle, and Aerial, Hip, Knee and Ground for camera level. To lessen the effect of this imbalance on the training set, additional samples are created through offline data augmentation: to compensate for the small number of Dutch shots, images labeled with a Neutral camera angle are rotated by angles ranging from 10 to 30 degrees, generating 217 artificial Dutch frames. Besides this offline generation of new images, we apply on-the-fly augmentation with both geometric transformations (horizontal flip and a slight random rotation) and chromatic ones (black-and-white filters of varying intensity, channel swapping, pixel randomization of channels, and cutout regularization).
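The augmentation steps above can be sketched with NumPy alone. This is a minimal illustration, not the project's pipeline: the nearest-neighbour rotation with zero-filled corners, the random tilt direction of the artificial Dutch frames, the ±5 degree range of the "slight" rotation, the 50% flip probability and the cutout size are all assumptions, and the pixel randomization of channels is omitted because its exact form is not specified. The function names `rotate`, `make_artificial_dutch` and `augment` are hypothetical.

```python
import numpy as np


def rotate(img: np.ndarray, degrees: float) -> np.ndarray:
    """Rotate an H x W (x C) image about its centre.

    Nearest-neighbour inverse mapping; output pixels whose source falls
    outside the image are filled with zeros (an assumption).
    """
    h, w = img.shape[:2]
    theta = np.deg2rad(degrees)
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # For every output pixel, find the source pixel it is sampled from.
    src_x = np.rint(cos_t * (xs - cx) + sin_t * (ys - cy) + cx).astype(int)
    src_y = np.rint(-sin_t * (xs - cx) + cos_t * (ys - cy) + cy).astype(int)
    inside = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[inside] = img[src_y[inside], src_x[inside]]
    return out


def make_artificial_dutch(neutral_imgs, n_out, rng):
    """Offline step: tilt Neutral frames by 10-30 degrees to create Dutch frames."""
    frames = []
    for i in range(n_out):
        # Tilt direction chosen at random (assumption).
        angle = rng.uniform(10.0, 30.0) * rng.choice([-1, 1])
        frames.append(rotate(neutral_imgs[i % len(neutral_imgs)], angle))
    return frames


def augment(img, rng, max_rot=5.0, cutout_frac=0.125):
    """On-the-fly step: geometric, then chromatic transforms on an H x W x 3 image."""
    out = img.astype(np.float32)
    # Geometric: random horizontal flip and a slight random rotation.
    if rng.random() < 0.5:
        out = out[:, ::-1]
    out = rotate(out, rng.uniform(-max_rot, max_rot))
    # Chromatic: blend towards grayscale (black-and-white filter) with random intensity.
    alpha = rng.uniform(0.0, 1.0)
    gray = out.mean(axis=2, keepdims=True)
    out = (1.0 - alpha) * out + alpha * gray
    # Chromatic: random permutation (swap) of the colour channels.
    out = out[:, :, rng.permutation(3)]
    # Cutout regularization: zero a random square patch.
    h, w = out.shape[:2]
    size = max(1, int(min(h, w) * cutout_frac))
    y = rng.integers(0, h - size + 1)
    x = rng.integers(0, w - size + 1)
    out[y:y + size, x:x + size] = 0.0
    return out
```

With `rng = np.random.default_rng(0)`, calling `make_artificial_dutch(neutral_frames, 217, rng)` would produce 217 tilted frames, matching the count reported above. In a Keras training loop, the geometric part could instead rely on the built-in `RandomFlip` and `RandomRotation` preprocessing layers.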

Results

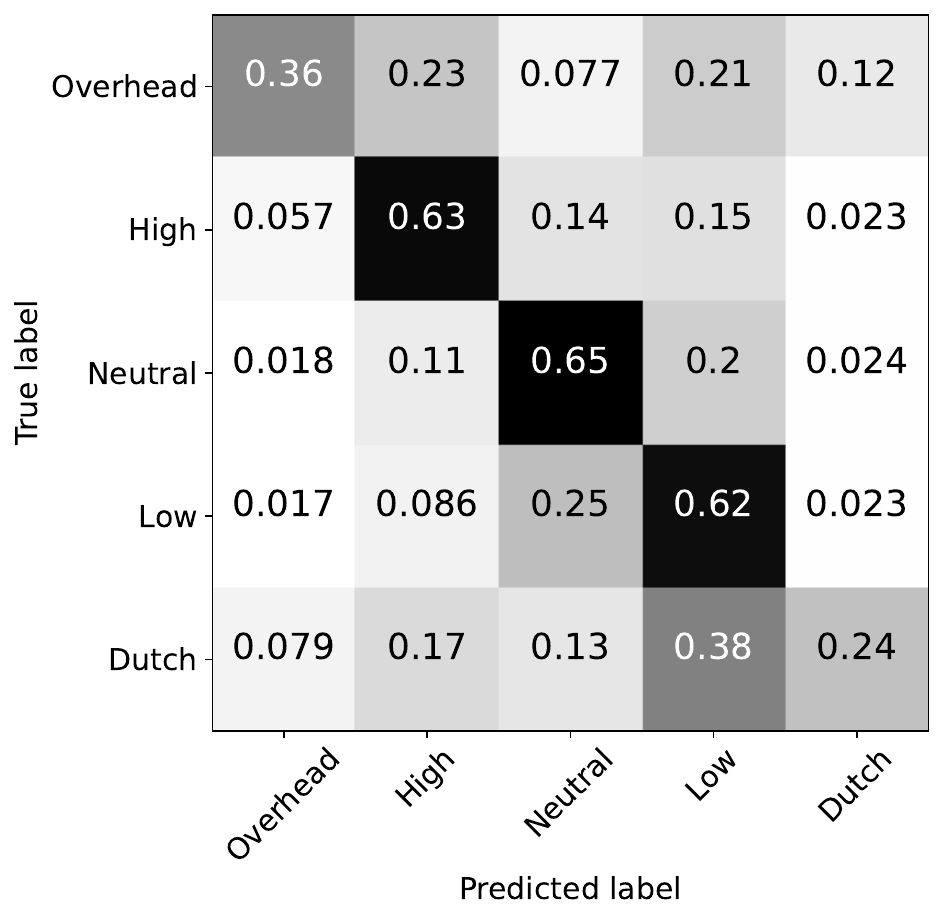

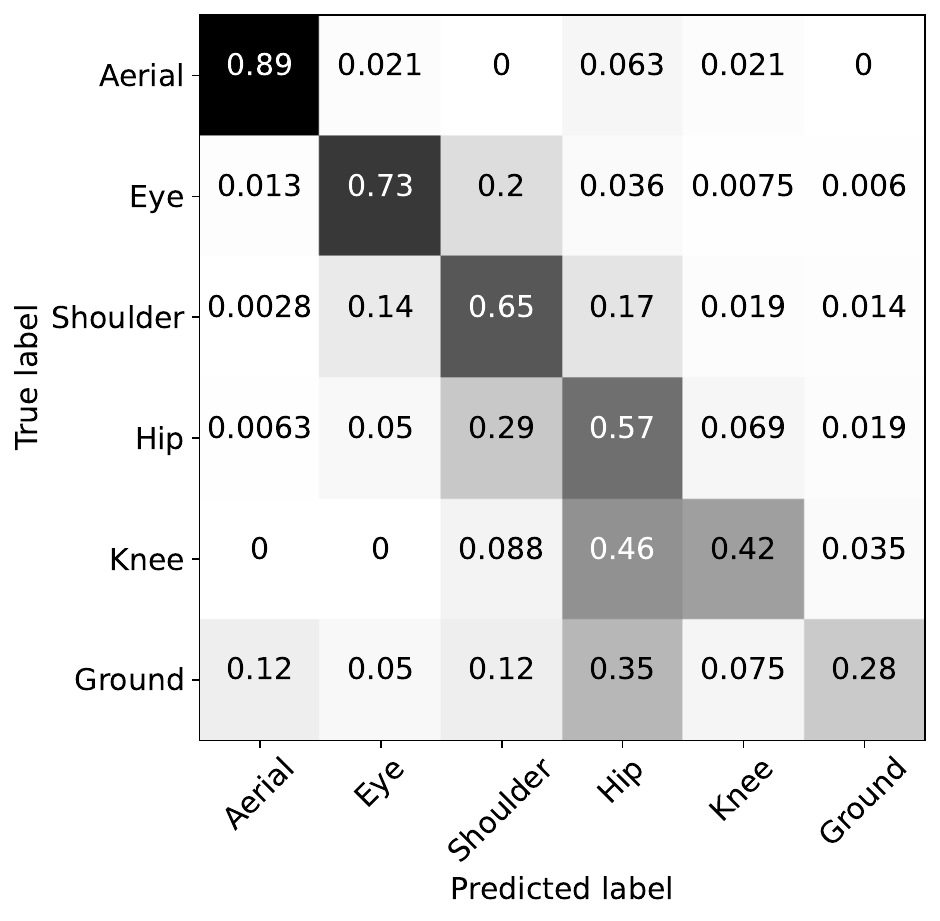

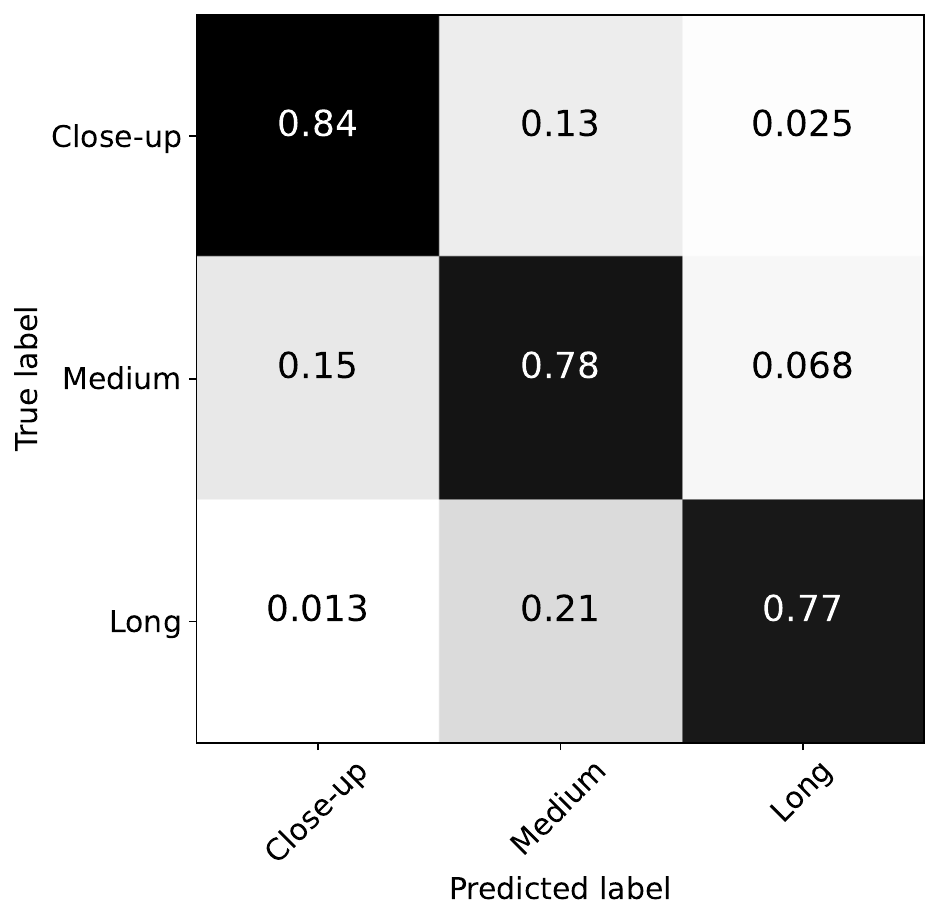

The confusion matrices in the figures show the results of the models trained on the training sets and evaluated on the test sets. The following F1-scores are obtained:

| F1-score | Camera Angle | Camera Level | Shot Scale |
|---|---|---|---|
| F1-macro | 0.49 | 0.59 | 0.80 |
| F1-micro | 0.61 | 0.68 | 0.80 |
| F1-weighted | 0.62 | 0.69 | 0.80 |

While the performance on shot scale (F1 = 0.80) is comparable to that of similar state-of-the-art systems on live-action footage, there are no established state-of-the-art systems against which to compare the F1-scores of 0.61 for camera angle and 0.68 for camera level. However, considering the vastness and heterogeneity of the data domain, the limited availability and variety of usable data, and the imbalanced nature of some classes, the results on camera level and angle can be considered satisfactory.
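The three averages reported for the F1-score combine per-class scores differently: macro averaging weights every class equally, weighted averaging weights each class by its number of test frames, and micro averaging pools all decisions, so for single-label classification it equals accuracy. A minimal NumPy sketch computing them from a confusion matrix follows; the function name `f1_scores` is hypothetical, and rows are assumed to hold ground-truth classes and columns predictions.

```python
import numpy as np


def f1_scores(cm) -> dict:
    """Macro, micro and weighted F1 from a square confusion matrix.

    Assumes cm[i][j] counts frames of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    support = cm.sum(axis=1)    # frames per true class
    predicted = cm.sum(axis=0)  # frames per predicted class
    zeros = np.zeros_like(tp)
    # Guard against empty classes: undefined ratios are set to 0.
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, support, out=zeros.copy(), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return {
        "macro": f1.mean(),                                # every class counts equally
        "micro": tp.sum() / cm.sum(),                      # pooled; equals accuracy here
        "weighted": (f1 * support).sum() / support.sum(),  # classes weighted by support
    }
```

For instance, `f1_scores([[8, 2], [1, 9]])` gives a micro-F1 of 0.85 (17 correct predictions out of 20). scikit-learn's `sklearn.metrics.f1_score` with `average="macro"`, `"micro"` or `"weighted"` computes the same quantities directly from label vectors.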

Error analysis

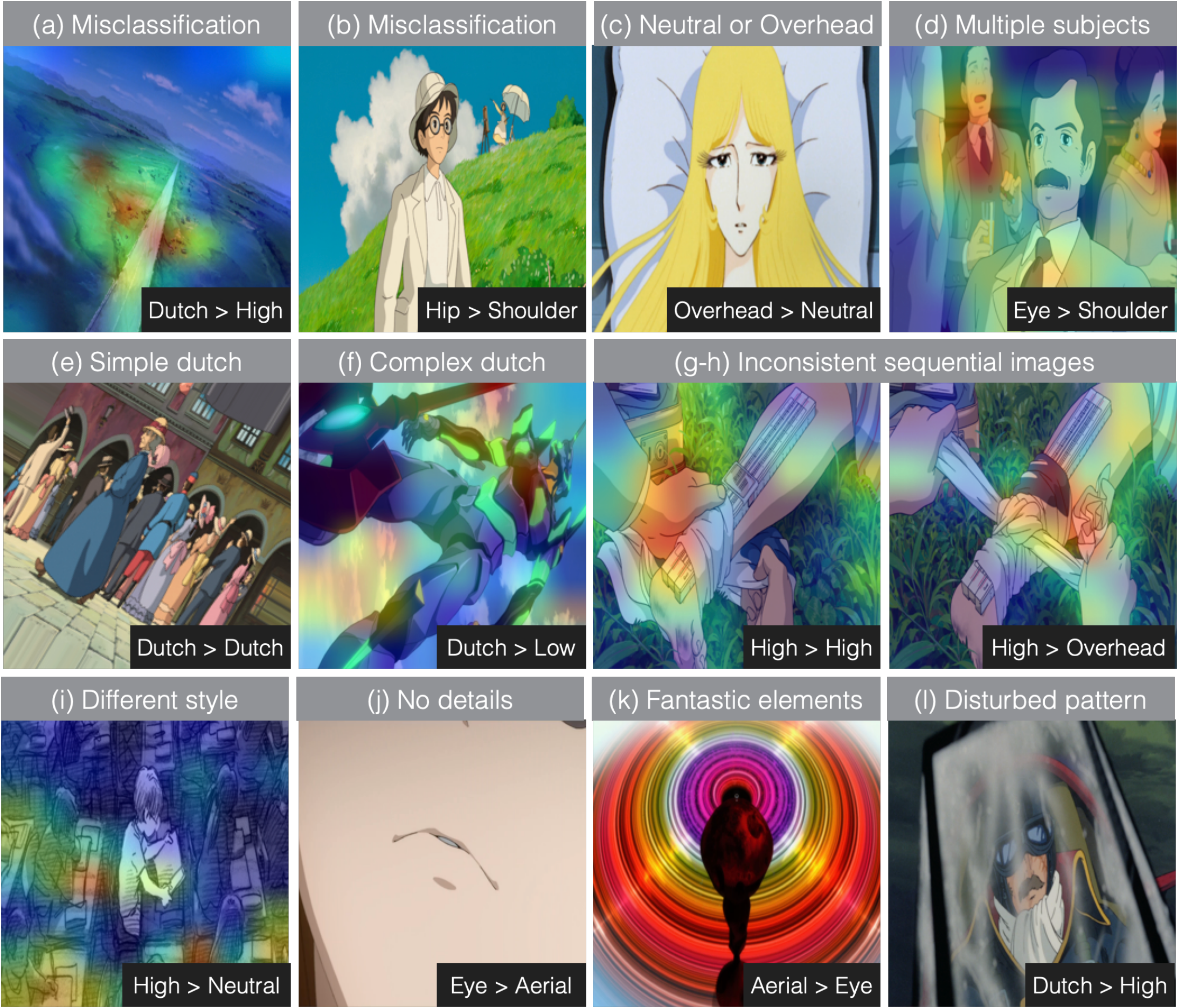

The figure groups the main types of error. Starting from the top left, the hypothesized causes are:

- two misclassifications due to insufficiently robust models;

- inability to understand the context of the various scenes (in the example, is the girl standing or lying down?);

- the presence of multiple subjects (in Camera Level);

- bias arising from the fact that most Dutch training images are simple (many of them artificially generated), whereas many Dutch test images are visually complex;

- inconsistent results on sequential frames;

- frames with an unfamiliar style;

- frames that do not show enough detail;

- frames that contain imaginary elements with no equivalent in reality;

- frames disturbed by atypical patterns.

In the figures, labels are shown as (GT value > Predicted value), where GT denotes the ground truth.

Get the demo and the models

Jupyter notebook Camera Features - Keras Models

Citations

For any use of or reference to this project, please cite the following.

@INPROCEEDINGS{anime22,
  author = {Gualandris, Gianluca and Savardi, Mattia and Signoroni, Alberto and Benini, Sergio},
  title = {Automatic indexing of virtual camera features from Japanese anime},
  booktitle = {Image Analysis and Processing. ICIAP 2022 Workshops: ICIAP International Workshops, Lecce, Italy, May 23--27, 2022, Revised Selected Papers, Part I},
  pages = {186--197},
  year = {2022},
  organization = {Springer}
}